\(\)

\(%\newcommand{\CLRS}{\href{https://mitpress.mit.edu/books/introduction-algorithms}{Cormen et al}}\)

\(\newcommand{\CLRS}{\href{https://mitpress.mit.edu/books/introduction-algorithms}{Introduction to Algorithms, 3rd ed.} (\href{http://libproxy.aalto.fi/login?url=http://site.ebrary.com/lib/aalto/Doc?id=10397652}{online via Aalto lib})}\)

\(\newcommand{\SW}{\href{http://algs4.cs.princeton.edu/home/}{Algorithms, 4th ed.}}\)

\(%\newcommand{\SW}{\href{http://algs4.cs.princeton.edu/home/}{Sedgewick and Wayne}}\)

\(\)

\(\newcommand{\Java}{\href{http://java.com/en/}{Java}}\)

\(\newcommand{\Python}{\href{https://www.python.org/}{Python}}\)

\(\newcommand{\CPP}{\href{http://www.cplusplus.com/}{C++}}\)

\(\newcommand{\ST}[1]{{\Blue{\textsf{#1}}}}\)

\(\newcommand{\PseudoCode}[1]{{\color{blue}\textsf{#1}}}\)

\(\)

\(%\newcommand{\subheading}[1]{\textbf{\large\color{aaltodgreen}#1}}\)

\(\newcommand{\subheading}[1]{\large{\usebeamercolor[fg]{frametitle} #1}}\)

\(\)

\(\newcommand{\Blue}[1]{{\color{flagblue}#1}}\)

\(\newcommand{\Red}[1]{{\color{aaltored}#1}}\)

\(\newcommand{\Emph}[1]{\emph{\color{flagblue}#1}}\)

\(\)

\(\newcommand{\Engl}[1]{({\em engl.}\ #1)}\)

\(\)

\(\newcommand{\Pointer}{\raisebox{-1ex}{\huge\ding{43}}\ }\)

\(\)

\(\newcommand{\Set}[1]{\{#1\}}\)

\(\newcommand{\Setdef}[2]{\{{#1}\mid{#2}\}}\)

\(\newcommand{\PSet}[1]{\mathcal{P}(#1)}\)

\(\newcommand{\Card}[1]{{\vert{#1}\vert}}\)

\(\newcommand{\Tuple}[1]{(#1)}\)

\(\newcommand{\Implies}{\Rightarrow}\)

\(\newcommand{\Reals}{\mathbb{R}}\)

\(\newcommand{\Seq}[1]{(#1)}\)

\(\newcommand{\Arr}[1]{[#1]}\)

\(\newcommand{\Floor}[1]{{\lfloor{#1}\rfloor}}\)

\(\newcommand{\Ceil}[1]{{\lceil{#1}\rceil}}\)

\(\newcommand{\Path}[1]{(#1)}\)

\(\)

\(%\newcommand{\Lg}{\lg}\)

\(\newcommand{\Lg}{\log_2}\)

\(\)

\(\newcommand{\BigOh}{O}\)

\(%\newcommand{\BigOh}{\mathcal{O}}\)

\(\newcommand{\Oh}[1]{\BigOh(#1)}\)

\(\)

\(\newcommand{\todo}[1]{\Red{\textbf{TO DO: #1}}}\)

\(\)

\(\newcommand{\NULL}{\textsf{null}}\)

\(\)

\(\newcommand{\Insert}{\ensuremath{\textsc{insert}}}\)

\(\newcommand{\Search}{\ensuremath{\textsc{search}}}\)

\(\newcommand{\Delete}{\ensuremath{\textsc{delete}}}\)

\(\newcommand{\Remove}{\ensuremath{\textsc{remove}}}\)

\(\)

\(\newcommand{\Parent}[1]{\mathop{parent}(#1)}\)

\(\)

\(\newcommand{\ALengthOf}[1]{{#1}.\textit{length}}\)

\(\)

\(\newcommand{\TRootOf}[1]{{#1}.\textit{root}}\)

\(\newcommand{\TLChildOf}[1]{{#1}.\textit{leftChild}}\)

\(\newcommand{\TRChildOf}[1]{{#1}.\textit{rightChild}}\)

\(\newcommand{\TNode}{x}\)

\(\newcommand{\TNodeI}{y}\)

\(\newcommand{\TKeyOf}[1]{{#1}.\textit{key}}\)

\(\)

\(\newcommand{\PEnqueue}[2]{{#1}.\textsf{enqueue}(#2)}\)

\(\newcommand{\PDequeue}[1]{{#1}.\textsf{dequeue}()}\)

\(\)

\(\newcommand{\Def}{\mathrel{:=}}\)

\(\)

\(\newcommand{\Eq}{\mathrel{=}}\)

\(\newcommand{\Asgn}{\mathrel{\leftarrow}}\)

\(%\newcommand{\Asgn}{\mathrel{:=}}\)

\(\)

\(%\)

\(% Heaps\)

\(%\)

\(\newcommand{\Downheap}{\textsc{downheap}}\)

\(\newcommand{\Upheap}{\textsc{upheap}}\)

\(\newcommand{\Makeheap}{\textsc{makeheap}}\)

\(\)

\(%\)

\(% Dynamic sets\)

\(%\)

\(\newcommand{\SInsert}[1]{\textsc{insert}(#1)}\)

\(\newcommand{\SSearch}[1]{\textsc{search}(#1)}\)

\(\newcommand{\SDelete}[1]{\textsc{delete}(#1)}\)

\(\newcommand{\SMin}{\textsc{min}()}\)

\(\newcommand{\SMax}{\textsc{max}()}\)

\(\newcommand{\SPredecessor}[1]{\textsc{predecessor}(#1)}\)

\(\newcommand{\SSuccessor}[1]{\textsc{successor}(#1)}\)

\(\)

\(%\)

\(% Union-find\)

\(%\)

\(\newcommand{\UFMS}[1]{\textsc{make-set}(#1)}\)

\(\newcommand{\UFFS}[1]{\textsc{find-set}(#1)}\)

\(\newcommand{\UFCompress}[1]{\textsc{find-and-compress}(#1)}\)

\(\newcommand{\UFUnion}[2]{\textsc{union}(#1,#2)}\)

\(\)

\(\)

\(%\)

\(% Graphs\)

\(%\)

\(\newcommand{\Verts}{V}\)

\(\newcommand{\Vtx}{v}\)

\(\newcommand{\VtxA}{v_1}\)

\(\newcommand{\VtxB}{v_2}\)

\(\newcommand{\VertsA}{V_\textup{A}}\)

\(\newcommand{\VertsB}{V_\textup{B}}\)

\(\newcommand{\Edges}{E}\)

\(\newcommand{\Edge}{e}\)

\(\newcommand{\NofV}{\Card{V}}\)

\(\newcommand{\NofE}{\Card{E}}\)

\(\newcommand{\Graph}{G}\)

\(\)

\(\newcommand{\SCC}{C}\)

\(\newcommand{\GraphSCC}{G^\text{SCC}}\)

\(\newcommand{\VertsSCC}{V^\text{SCC}}\)

\(\newcommand{\EdgesSCC}{E^\text{SCC}}\)

\(\)

\(\newcommand{\GraphT}{G^\text{T}}\)

\(%\newcommand{\VertsT}{V^\textup{T}}\)

\(\newcommand{\EdgesT}{E^\text{T}}\)

\(\)

\(%\)

\(% NP-completeness etc\)

\(%\)

\(\newcommand{\Poly}{\textbf{P}}\)

\(\newcommand{\NP}{\textbf{NP}}\)

\(\newcommand{\PSPACE}{\textbf{PSPACE}}\)

\(\newcommand{\EXPTIME}{\textbf{EXPTIME}}\)

Parallel for-loops

The parallel for-loop is a central construction in

parallel frameworks such as Scala parallel collections and OpenMP.

In pseudo-code, we can express a parallel for-loop as

parallel for \( i \) = 1 to \( n \):

\( f(i) \)

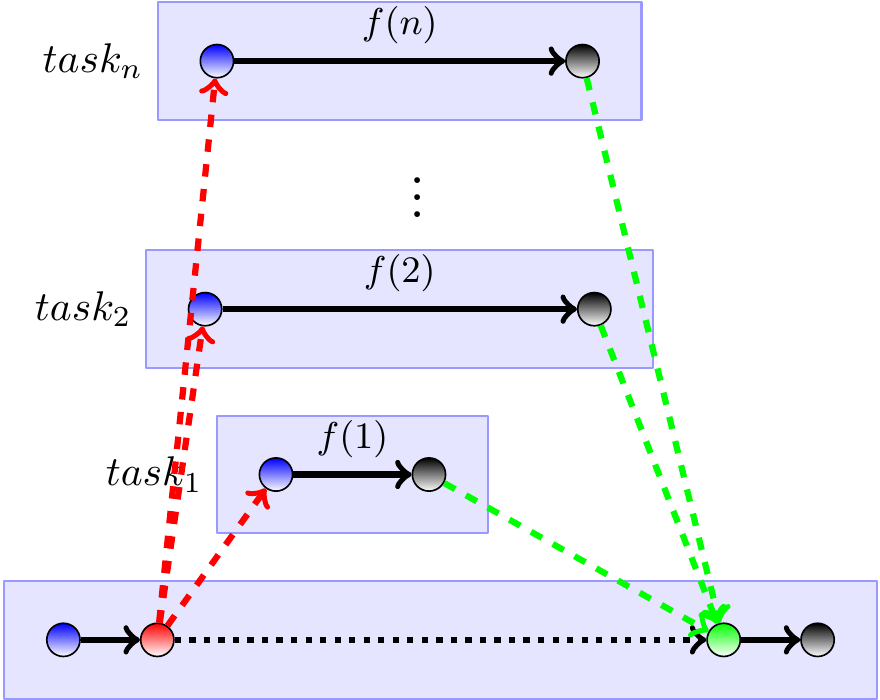

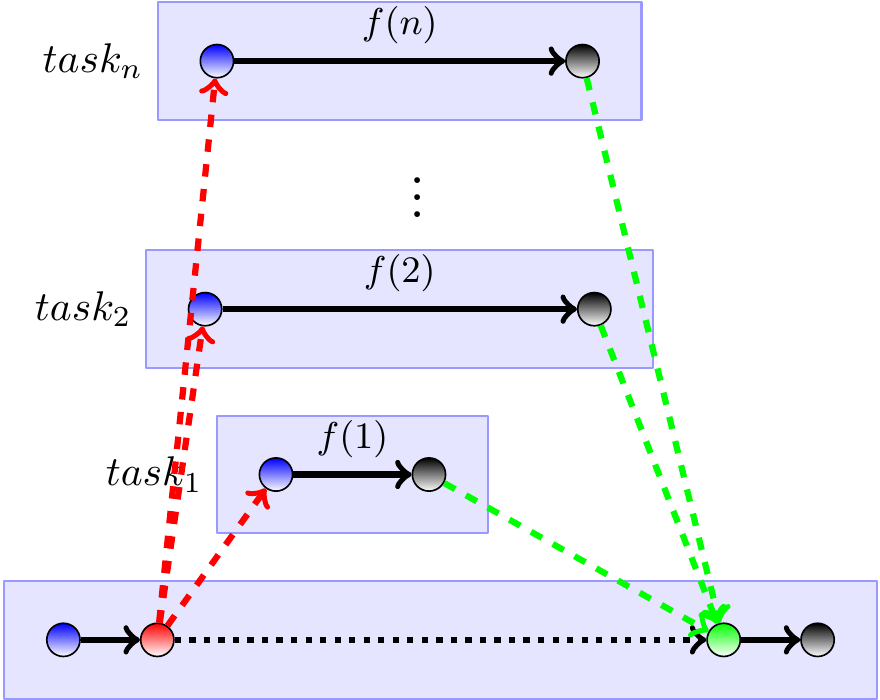

It simply computes the function \( f(i) \) for each \( i = 1,2,\ldots,n \).

The computations are done in parallel in tasks.

The computations can start and end in an arbitrary order.

In an idealized setting,

each \( f(i) \) is executed in its own task and

there are enough threads to execute all of them at the same time.

Thus the ideal work and span of parallel for-loops are:

The work is the sum of the works of \( f(1),\ldots,f(n) \).

The span is the maximum of the spans of \( f(1),\ldots,f(n) \).

The time needed to create the parallel tasks computing

the values \( f(i) \) is usually ignored in the analysis.
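As a small worked illustration of these two definitions (the running times \( t_i \) are an assumption introduced here, not part of the text above): suppose each \( f(i) \) is purely sequential and takes time \( t_i \), so that both its work and its span equal \( t_i \). Then the work \( W \) and the span \( S \) of the whole loop are

\[ W = \sum_{i=1}^{n} t_i, \qquad S = \max_{1 \le i \le n} t_i . \]

For instance, with \( t_i = i \) we get \( W = 1 + 2 + \cdots + n = n(n+1)/2 \) and \( S = n \). The ratio \( W/S = (n+1)/2 \) shows that the longest loop body dominates the span: even with \( n \) threads, the loop cannot finish before \( f(n) \) does.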

The idealized computation DAG for a parallel for-loop is

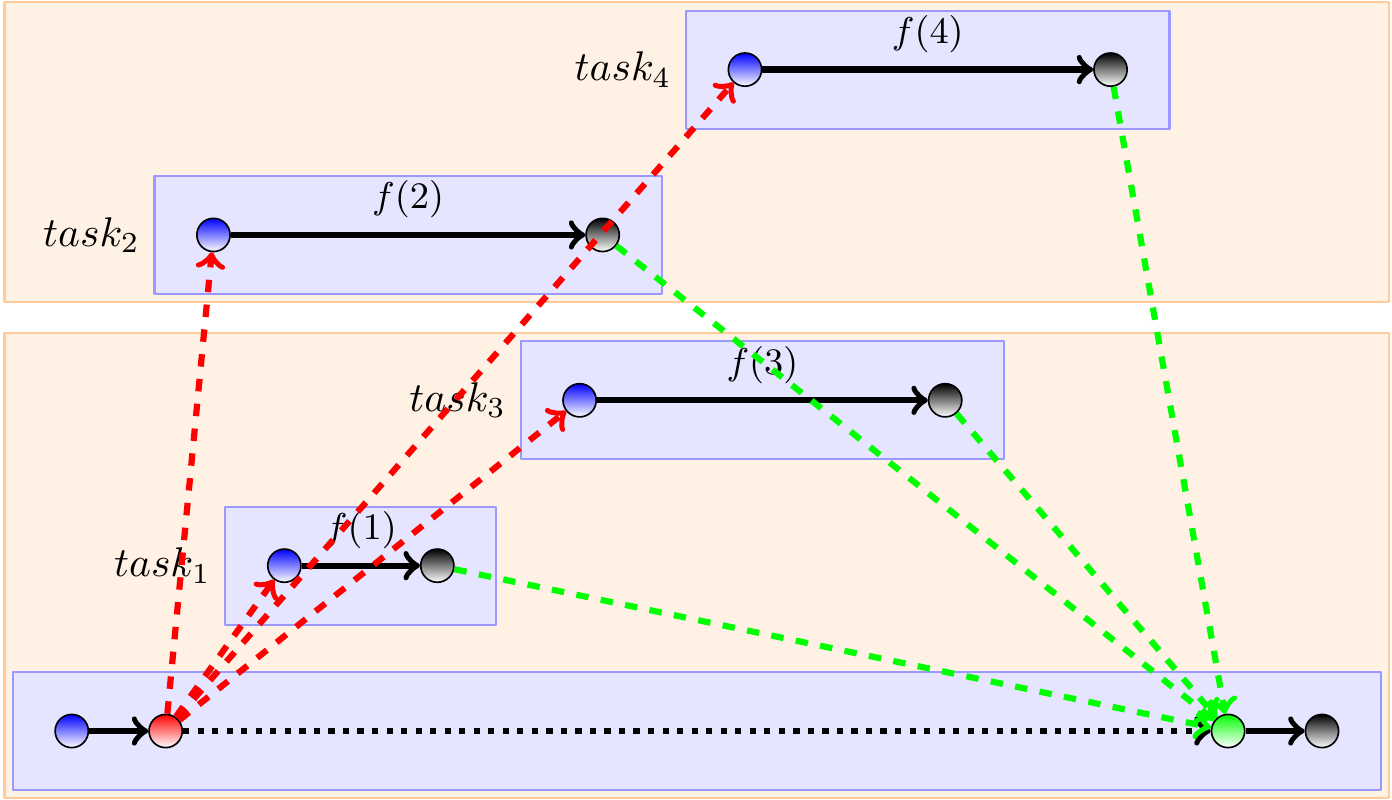

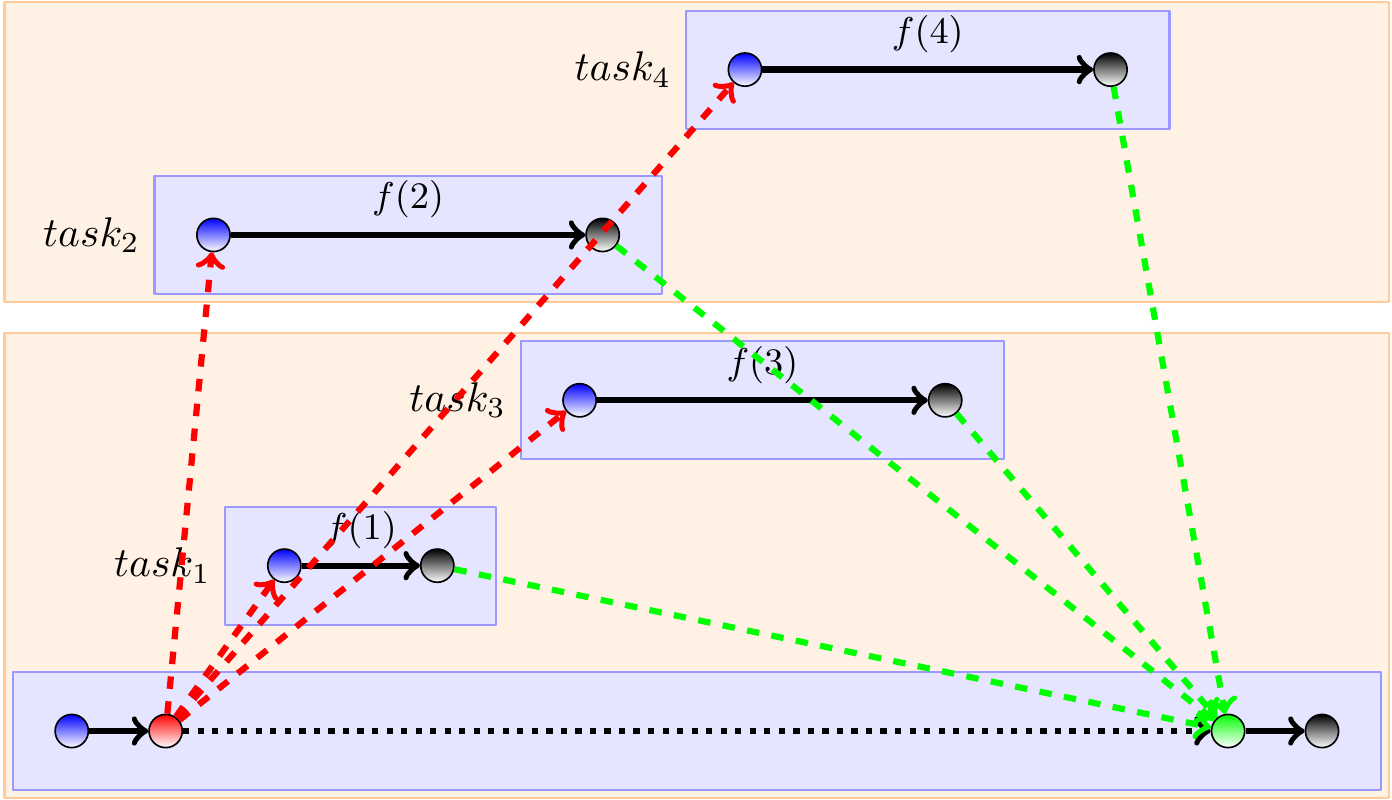

In a real setting,

when there are more tasks than available threads,

a scheduler will execute the tasks in some order in the available threads.

As an example, one possible computation DAG for executing the 4 tasks of

a parallel loop with two threads is

In addition, a parallel framework, such as Scala parallel collections,

may group several loop-body computations in a single task

to reduce task creation overhead.
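The grouping idea can be sketched with a plain Java thread pool. This is only an illustration under stated assumptions, not how Scala parallel collections are actually implemented: the object `ChunkedParallelFor`, the method `parallelFor`, and the parameters `chunkSize` and `threads` are hypothetical names chosen for this example.

```scala
import java.util.concurrent.Executors

object ChunkedParallelFor {
  /** Runs body(i) for every i in 0 until n.
    * Consecutive indices are grouped into tasks of at most chunkSize
    * loop bodies, and the tasks are scheduled on `threads` threads.
    * Returns the number of tasks that were created.
    */
  def parallelFor(n: Int, chunkSize: Int, threads: Int)(body: Int => Unit): Int = {
    require(chunkSize > 0 && threads > 0)
    val pool = Executors.newFixedThreadPool(threads)
    try {
      // One task per chunk [start, end) instead of one task per index.
      val tasks = (0 until n by chunkSize).map { start =>
        val end = math.min(start + chunkSize, n)
        pool.submit(new Runnable {
          def run(): Unit = (start until end).foreach(body)
        })
      }
      // Wait until every task has finished.
      tasks.foreach(_.get())
      tasks.size
    } finally pool.shutdown()
  }
}
```

For example, `ChunkedParallelFor.parallelFor(10, 4, 2)(i => out(i) = i * i)` on an array `out` of length 10 creates three tasks (covering indices 0 to 3, 4 to 7, and 8 to 9) and runs them on two threads. A larger `chunkSize` means fewer tasks and less creation overhead, but also fewer opportunities to balance the load between threads.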

To use parallel collection methods, such as for-loops, in Scala,

one can use the scala.collection.parallel classes.

For instance, the plain parallel for-loop construction

can be achieved easily with ParRange:

(0 until n).par.foreach(i => f(i))

This applies the function \( f(i) \) in parallel to each \( i = 0,\ldots,n-1 \).

Again, the applications are done in parallel and

get finished in an arbitrary order:

scala> (0 until 20).par.foreach(i => print(s"$i "))

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19

scala> (0 until 20).par.foreach(i => print(s"$i "))

0 15 1 2 3 10 11 12 13 14 17 18 19 16 4 5 6 7 8 9

Internally, the scala.collection.parallel library uses

the Java fork-join framework to implement the parallel operations.
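To give a rough idea of what a fork-join based parallel loop can look like, here is a minimal sketch that uses the Java fork-join classes directly from Scala. It is not the actual implementation inside scala.collection.parallel; the names `ForkJoinParallelFor`, `RangeTask`, `run`, and `threshold` are hypothetical and chosen only for this example. The index range is split recursively in half until a piece has at most `threshold` indices, and such small pieces are executed sequentially.

```scala
import java.util.concurrent.{ForkJoinPool, RecursiveAction}

object ForkJoinParallelFor {
  // A task that applies body(i) to every index i in [lo, hi).
  private final class RangeTask(lo: Int, hi: Int, threshold: Int, body: Int => Unit)
      extends RecursiveAction {
    override def compute(): Unit =
      if (hi - lo <= threshold) {
        // Small enough: run the loop bodies sequentially in this task.
        (lo until hi).foreach(body)
      } else {
        // Split the range in two; run the halves as separate tasks.
        val mid = lo + (hi - lo) / 2
        val left = new RangeTask(lo, mid, threshold, body)
        val right = new RangeTask(mid, hi, threshold, body)
        left.fork()     // schedule the left half asynchronously
        right.compute() // run the right half in the current thread
        left.join()     // wait for the left half to finish
      }
  }

  /** Applies body(i) to every i in [lo, hi) using the common fork-join pool. */
  def run(lo: Int, hi: Int, threshold: Int)(body: Int => Unit): Unit = {
    require(threshold >= 1, "threshold must be at least 1")
    ForkJoinPool.commonPool().invoke(new RangeTask(lo, hi, threshold, body))
  }
}
```

A call such as `ForkJoinParallelFor.run(0, 100, 8)(i => out(i) = 2 * i)` fills an array `out` of length 100. Each index is written by exactly one loop body, so the result is the same regardless of the order in which the pieces happen to run.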

As shown above,

the bodies of a parallel for-loop are executed in parallel

and

in an arbitrary order.

To ensure determinism (that the same end result is always obtained),

the executions should be independent:

if one execution modifies some mutable structure (variables, array elements, mutable lists, etc.), then the other executions should not read or write the same structure.

In the previous example,

the loop bodies are not independent because

they write to the same console.
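The difference between independent and dependent loop bodies can be illustrated with the following sketch. To keep it runnable without extra libraries, it uses Java parallel streams (`java.util.stream.IntStream`) instead of `.par`; this substitution, as well as the names `IndependentBodies`, `squares`, and `racySum`, are assumptions made for this example only.

```scala
import java.util.stream.IntStream

object IndependentBodies {
  // Independent bodies: body i writes only to out(i), and no other body
  // reads or writes that element. The result is always the same.
  def squares(n: Int): Array[Long] = {
    val out = new Array[Long](n)
    IntStream.range(0, n).parallel().forEach(i => out(i) = i.toLong * i)
    out
  }

  // NOT independent: every body reads and writes the same variable `total`.
  // Updates from different threads can overwrite each other, so the
  // returned value may differ from run to run.
  def racySum(n: Int): Long = {
    var total = 0L
    IntStream.range(0, n).parallel().forEach(i => total += i)
    total
  }
}
```

Here `squares(5)` always yields the array `0, 1, 4, 9, 16`, whereas `racySum(n)` is not guaranteed to return \( 0 + 1 + \cdots + (n-1) \), because concurrent updates of `total` can be lost.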