Greedy Algorithms¶

In the two last examples of dynamic programming, we had to consider many sub-problems to solve a problem. For instance, in the rodding cutting problem we had to consider all the possible ways of making the first cut. Similarly, in the LCS problem there were two potential ways for obtaining the optimal solution when the last symbols in the sequence were not the same.

However, sometimes it is possible to greedily select just one case and thus reduce the problem into one smaller sub-problem. We next consider one such simple greedy algorithm. In the graphs rounds, we’ll see other greedy algorithms when we study the minimum spanning tree algorithms.

Example IV: Activity-selection problem¶

Assume that we are given a finite set of activities \( \GActs = \Set{\GAct{1},…,\GAct{n}} \). Each activity \( \GAct{i} \) is associated with

a start time \( \GStart{i} \), and

a finish time \( \GFinish{i} \) with \( \GStart{i} < \GFinish{i} \).

An activity executes in the half-open interval \( [\GStart{i},\GFinish{i}) \). Two activities, \( \GAct{i} \) and \( \GAct{j} \) for \( i \neq j \), are said to be compatible if they do not execute at the same time, meaning that either \( \GFinish{i} \le \GStart{j} \) or \( \GFinish{j} \le \GStart{i} \). Our task is find a maximal compatible subset of \( \GActs \), i.e., a subset \( \GSol \subseteq \GActs \) of largest size such that all the activities in the set are mutually compatible.

Example

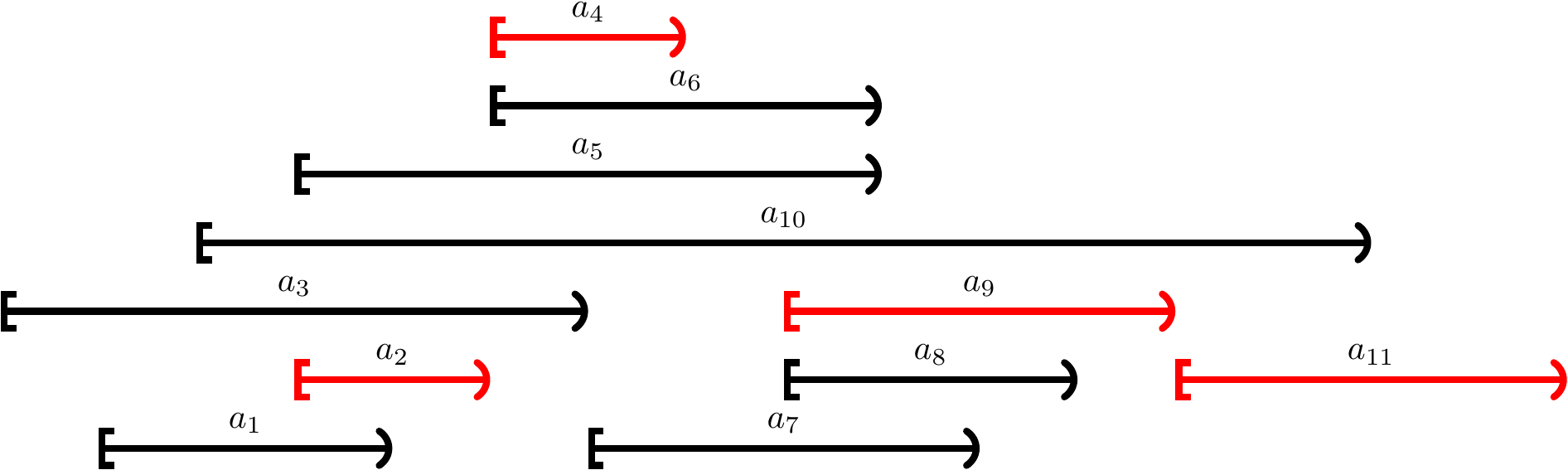

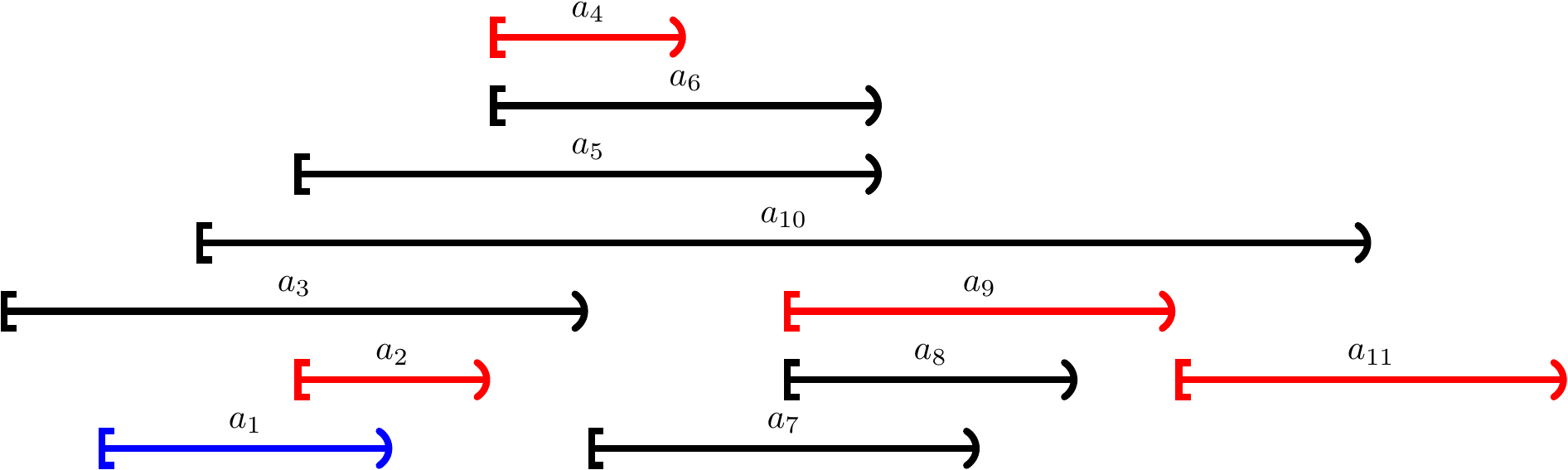

Consider the set of activities \( \GActs = \Set{\GAct{1},…,\GAct{11}} \) with $$\begin{array}{c|ccccccccccc} i & 1 & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 & 11 \\ \hline \GStart{i} & 1 & 3 & 0 & 5 & 3 & 5 & 6 & 8 & 8 & 2 & 12 \\ \GFinish{i} & 4 & 5 & 6 & 7 & 9 & 9 & 10 & 11 & 12 & 14 & 16 \end{array}$$ A maximal compatible subset is \( \Set{\GAct{2},\GAct{4},\GAct{9},\GAct{11}} \).

A dynamic programming formulation¶

In order to relate dynamic programming and greedy algorithms, let us first see a dynamic programming approach for solving the activity selection problem.

Assume that the set of activities is \( \GActs = \Set{\GAct{1},…,\GAct{n}} \). For obtaining the recursive definition for optimal solutions, let $$\GActss{l}{u} = \Setdef{\GAct{i}\in\GActs}{l \le \GStart{i} \land \GFinish{i} \le u}$$ be the set of activities that start at \( l \) or later and finish at latest at \( u \). If we use \( L = \min_{i \in [1..n]}{\GStart{i}} \) and \( U = \max_{i \in [1..n]}{\GFinish{i}} \), then the original problem instance \( \GActs \) is \( \GActss{L}{U} \).

Each (sub-)problem on \( \GActss{l}{u} \) has the optimal sub-solution property:

Take any activity \( \GAct{i} \in \GActss{l}{u} \)

Let \( \GSols{l}{\GStart{i}} \) and \( \GSols{\GFinish{i}}{u} \) be any maximal compatible subsets for the sets \( \GActss{l}{\GStart{i}} \) of activities in \( \GActss{l}{u} \) ending before \( \GAct{i} \) and \( \GActss{\GFinish{i}}{u} \) of activities in \( \GActss{l}{u} \) starting after \( \GAct{i} \).

Then \( \GSolsi{l}{u}{i} = \GSols{l}{\GStart{i}} \cup \Set{\GAct{i}} \cup \GSols{\GFinish{i}}{u} \) is a maximal compatible subset for \( \GActss{l}{u} \) that contains \( \GAct{i} \).

Thus a maximal compatible subset for \( \GActss{l}{u} \) is $$\GSols{l}{u} = \max_{\GAct{j} \in \GActss{l}{u}} \GSolsi{l}{u}{j}.$$ The solution for the original problem is \( \GSols{L}{U} \). We now have a recursive definition that can be turned into a dynamic programming algorithm. Note that the number of sub-problems \( \GActss{l}{u} \) one has to consider is at most \( (n+1) \times (n+1) \) because (i) the start index \( l \) of each \( \GActss{l}{u} \) is either \( L \) or an ending time of an activity, and (ii) the ending index \( u \) of each \( \GActss{l}{u} \) is either \( U \) or a starting time of an activity. Thus the dynamic programming approach can be made to work in polynomial time. On the contrary, the straighforward approach of

enumerating all the subsets of \( \GActs \),

checking the compatibility of each, and

remembering a largest compatible one

would require at least exponential time as there are exponetially many subsets.

In Scala, we can implement the dynamic programming algorithm as follows. Note that the implementation is not very well optimized.

def activitySelectorDynprog(acts: Set[(Int,Int)]) = {

require(acts.forall(a => a._1 >= 0 && a._1 < a._2))

val mem = collection.mutable.Map[Set[(Int,Int)],Set[(Int,Int)]]()

def inner(current: Set[(Int,Int)]): Set[(Int,Int)] = mem.getOrElseUpdate(current, {

var best = Set[(Int,Int)]()

for(act <- current) {

val earlier = current.filter(a => a._2 <= act._1)

val later = current.filter(a => act._2 <= a._1)

val earlierResult = inner(earlier)

val laterResult = inner(later)

if(earlierResult.size + 1 + laterResult.size > best.size)

best = (earlierResult ++ laterResult) + act

}

best

})

inner(acts)

}

Running our example with it, we get:

scala> val acts = Set((1,4),(3,5),(0,6),(5,7),(3,9),(5,9),(6,10),(8,11),(8,12),(2,14),(12,16))

acts: ....Set[(Int, Int)] = Set((3,9), (6,10), (5,9), (8,11), (3,5), (1,4), (12,16), (5,7), (2,14), (8,12), (0,6))

scala> activitySelectorDynprog(acts).toVector.sortBy(_._1)

res0: ....Vector[(Int, Int)] = Vector((3,5), (5,7), (8,11), (12,16))

The greedy approach¶

The activity selection problem has a property that we can use to obtain an even faster and simpler algorithm. Namely, we do not have to consider all the activities in a subset but can

just select one that finishes as early as possible, and

then, consider the sub-problem obtained by removing that activity and all the activities that are not compatible with it.

This optimization is based on the observation that if we have a maximal compatible subset that does not contain an activity \( \GAct{i} \) that finishes as early as possible, we can substitute one that is in the set with \( \GAct{i} \) and obtain a compatible subset of the same maximal size. This is formalized in the following theorem.

Theorem

Let \( \GSol \subseteq \GActs \) be a maximal subset of compatible activities, \( \GAct{i} \) an activity with the earliest finish time among those in \( \GActs \), and \( \GAct{j} \) be the activity with the earliest finish time among those in \( \GSol \). Then \( (\GSol \setminus \Set{\GAct{j}}) \cup \Set{\GAct{i}} \) is also a maximal subset of compatible activities.

Proof

If \( i = j \), we are done.

Otherwise, it must be that \( \GFinish{i} \le \GFinish{j} \). As \( \GAct{j} \) is the activity with the earliest finish time among those in \( \GSol \), \( \GSol \) does not contain any \( \GAct{k} \), \( k \neq j \), with \( \GStart{k} \le \GFinish{i} \). Therefore, it is safe to substitute \( \GAct{j} \) with \( \GAct{i} \) in \( \GSol \) and obtain a set of compatible activities that has the same maximal size.

Example

Consider again the activities \( \GActs = \Set{\GAct{1},…,\GAct{11}} \), where $$\begin{array}{c|ccccccccccc} i & 1 & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 & 11 \\ \hline \GStart{i} & 1 & 3 & 0 & 5 & 3 & 5 & 6 & 8 & 8 & 2 & 12 \\ \GFinish{i} & 4 & 5 & 6 & 7 & 9 & 9 & 10 & 11 & 12 & 14 & 16 \end{array}$$ One maximal compatible subset is \( \Set{\GAct{2},\GAct{4},\GAct{9},\GAct{11}} \). It does not contain the activity \( \GAct{1} \) with the earliest finish time among those in \( \GActs \). If we substitute the activity \( \GAct{2} \) having the earliest finish time among those in \( \GActs \) with the activity \( \GAct{1} \), the result is the maximal compatible subset \( \Set{\GAct{1},\GAct{4},\GAct{9},\GAct{11}} \).

Observe that \( \Set{\GAct{4},\GAct{9},\GAct{11}} \) is a maximal compatible subset of the “reduced” sub-problem \( \Set{\GAct{4},\GAct{6},\GAct{7},\GAct{8},\GAct{9},\GAct{11}} \) obtained by removing \( \GAct{1} \) and all the activities that are not compatible with it.

In Scala, we can implement the greedy algorithm as follows:

def activitySelector(acts: Set[(Int,Int)]) = {

require(acts.forall(a => a._1 >= 0 && a._1 < a._2))

val sorted = acts.toVector.sortBy(_._2)

val sol = collection.mutable.ArrayBuffer[(Int,Int)]()

var time = Int.MinValue

for(act <- sorted) {

if(act._1 >= time) {

sol += act

time = act._2

}

}

sol.toSet

}

Running our example with it, we get:

scala> val acts = Set((1,4),(3,5),(0,6),(5,7),(3,9),(5,9),(6,10),(8,11),(8,12),(2,14),(12,16))

acts: ....Set[(Int, Int)] = Set((3,9), (6,10), (5,9), (8,11), (3,5), (1,4), (12,16), (5,7), (2,14), (8,12), (0,6))

scala> activitySelector(acts).toVector.sortBy(_._1)

res0: ....Vector[(Int, Int)] = Vector((1,4), (5,7), (8,11), (12,16))